Getting Started with Core ML: Building a Simple Image Recognition App

Xcode 9 beta & iOS 11 beta & Swift 4

Core ML is a framework for integrating machine learning models into apps.

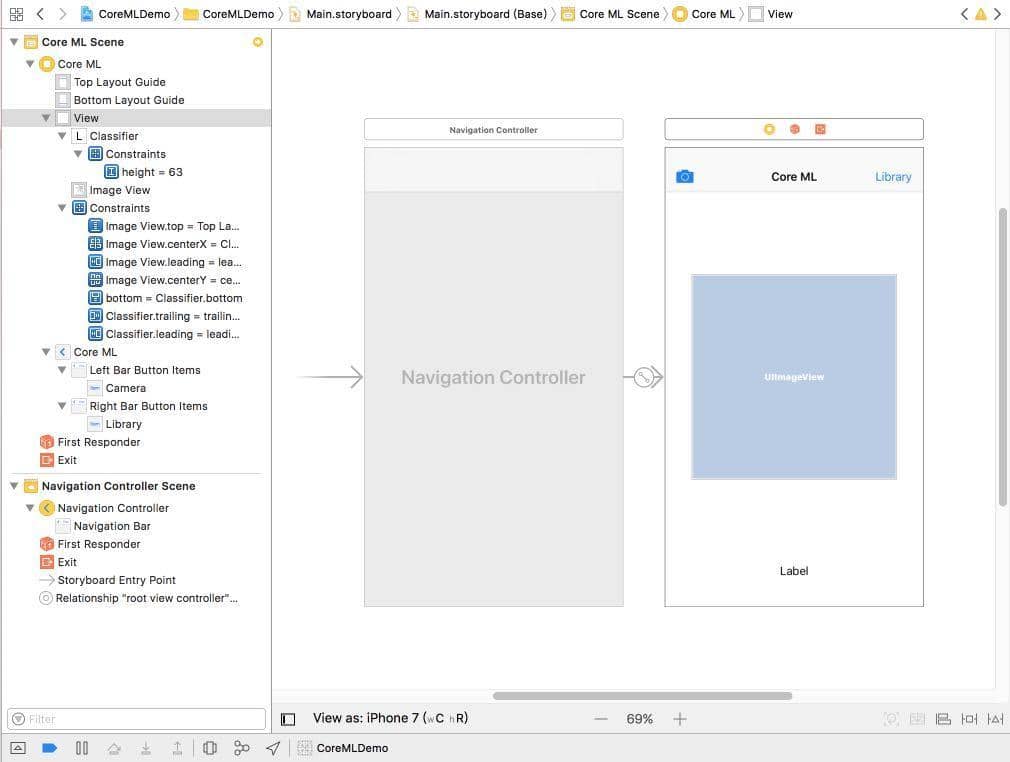

1. Create the starter project

- Create a new project named CoreMLDemo from the Single View Application template.

- Build the UI.

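2. Implement taking photos and choosing from the photo library

- Make the view controller adopt two protocols: `class ViewController: UIViewController, UINavigationControllerDelegate, UIImagePickerControllerDelegate`

- Add two outlets and connect them in Interface Builder.

- Implement the two actions.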


- Implement the UIImagePickerControllerDelegate methods.

- In Info.plist, add the permission prompts for 📷 access: Privacy – Camera Usage Description and Privacy …

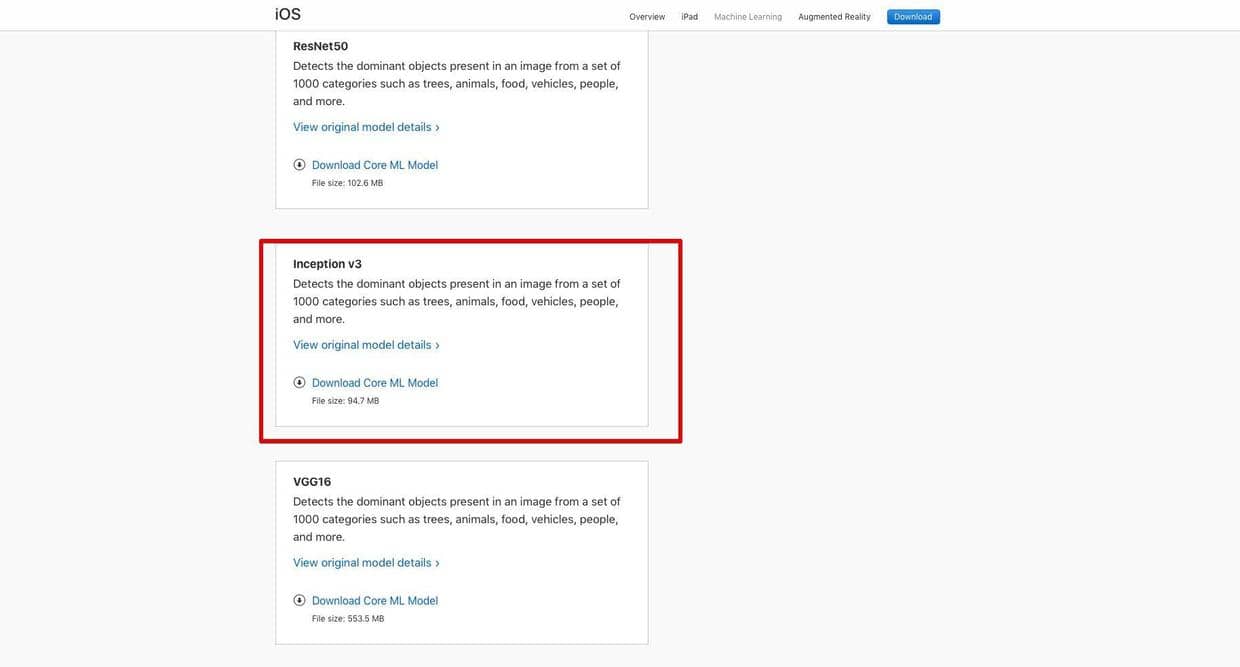

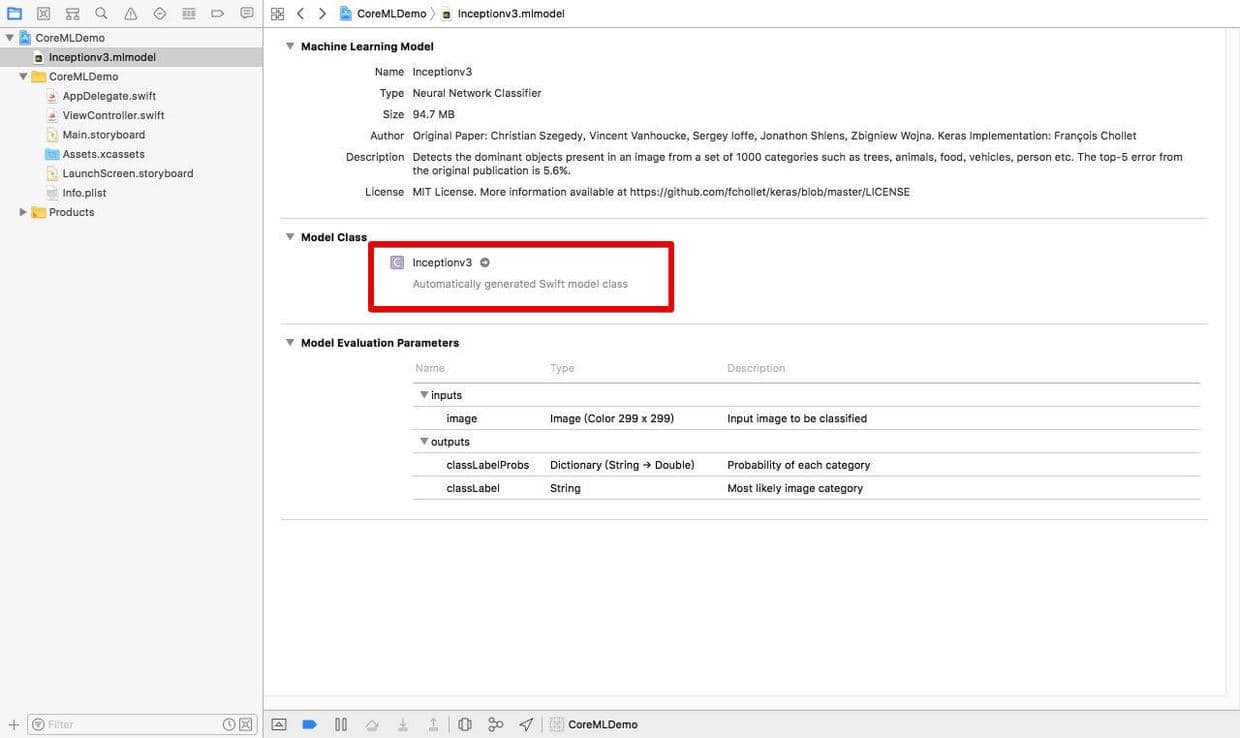
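The steps in this section can be put together roughly as follows. This is a minimal sketch rather than the author's exact code: the outlet names `imageView` and `classifier`, the action names `camera(_:)` and `openLibrary(_:)`, and the helper `presentPicker(sourceType:)` are hypothetical. It uses Swift 4.2+ syntax; with the Swift 4 toolchain listed at the top, `UIImagePickerController.SourceType` was spelled `UIImagePickerControllerSourceType`.

```swift
import UIKit

// Minimal sketch of the step-2 view controller (Swift 4.2+ syntax).
// Outlet and action names are hypothetical; wire them to your own storyboard.
class ViewController: UIViewController, UINavigationControllerDelegate, UIImagePickerControllerDelegate {

    // The two outlets: the picked photo and the recognition result.
    @IBOutlet weak var imageView: UIImageView!
    @IBOutlet weak var classifier: UILabel!

    // Action 1: take a new photo with the camera.
    @IBAction func camera(_ sender: Any) {
        // The Simulator has no camera, so do nothing when it is unavailable.
        guard UIImagePickerController.isSourceTypeAvailable(.camera) else { return }
        presentPicker(sourceType: .camera)
    }

    // Action 2: pick an existing photo from the library.
    @IBAction func openLibrary(_ sender: Any) {
        presentPicker(sourceType: .photoLibrary)
    }

    // Shared setup for both actions (hypothetical helper).
    private func presentPicker(sourceType: UIImagePickerController.SourceType) {
        let picker = UIImagePickerController()
        picker.delegate = self        // requires both delegate protocols adopted above
        picker.sourceType = sourceType
        picker.allowsEditing = false
        present(picker, animated: true)
    }

    // UIImagePickerControllerDelegate: close the picker if the user cancels.
    func imagePickerControllerDidCancel(_ picker: UIImagePickerController) {
        dismiss(animated: true, completion: nil)
    }
}
```

Keeping the picker setup in one private helper means the two actions differ only in their source type. Without the camera usage key in Info.plist, the app is terminated when it first tries to use the camera.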

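3. Integrate the Core ML data model

- Download a Core ML model from Apple's machine learning page (Machine Learning). At the time of writing it offers six models; for recognizing images, use Inception v3. The download is a file with the .mlmodel extension. Drag it straight into the project, and Xcode automatically generates a Swift class named after the model, which you can use directly in your code.

- In ViewController.swift, import Core ML: `import CoreML`

- Initialize the Inceptionv3 model.

- Implement the `imagePickerController(_:didFinishPickingMediaWithInfo:)` method of the UIImagePickerControllerDelegate protocol.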


- Use Core ML: at the end of the `imagePickerController(_:didFinishPickingMediaWithInfo:)` method above, add the code that passes the picked photo to the model.
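A sketch of the Core ML part, assuming the model was added as `Inceptionv3.mlmodel`, so that Xcode generated a class `Inceptionv3` whose `prediction(image:)` method takes a 299×299 `CVPixelBuffer` and returns an output with a `classLabel` string (check the class Xcode generates for the exact names). `UIImage.pixelBuffer(width:height:)` and `classify(_:with:)` are hypothetical helpers, not part of any Apple API. The photo is simply scaled to 299×299 without preserving its aspect ratio.

```swift
import UIKit
import CoreML
import CoreVideo

extension UIImage {
    // Hypothetical helper: draw this image into a new 32ARGB pixel buffer of the
    // given size. Drawing into the smaller rect also does the resizing, without
    // preserving the aspect ratio.
    func pixelBuffer(width: Int, height: Int) -> CVPixelBuffer? {
        let attrs: [String: Any] = [
            kCVPixelBufferCGImageCompatibilityKey as String: true,
            kCVPixelBufferCGBitmapContextCompatibilityKey as String: true
        ]
        var buffer: CVPixelBuffer?
        let status = CVPixelBufferCreate(kCFAllocatorDefault, width, height,
                                         kCVPixelFormatType_32ARGB,
                                         attrs as CFDictionary, &buffer)
        guard status == kCVReturnSuccess, let pixelBuffer = buffer else { return nil }

        // The CPU may only touch the pixel memory while the buffer is locked.
        CVPixelBufferLockBaseAddress(pixelBuffer, [])
        defer { CVPixelBufferUnlockBaseAddress(pixelBuffer, []) }

        guard let context = CGContext(data: CVPixelBufferGetBaseAddress(pixelBuffer),
                                      width: width, height: height,
                                      bitsPerComponent: 8,
                                      bytesPerRow: CVPixelBufferGetBytesPerRow(pixelBuffer),
                                      space: CGColorSpaceCreateDeviceRGB(),
                                      bitmapInfo: CGImageAlphaInfo.noneSkipFirst.rawValue)
        else { return nil }

        // Core Graphics has its origin at the bottom left; flip it to match UIKit.
        context.translateBy(x: 0, y: CGFloat(height))
        context.scaleBy(x: 1, y: -1)

        UIGraphicsPushContext(context)
        draw(in: CGRect(x: 0, y: 0, width: width, height: height))
        UIGraphicsPopContext()
        return pixelBuffer
    }
}

// Hypothetical wrapper around the Xcode-generated Inceptionv3 class:
// returns the most likely label, or nil if conversion or prediction fails.
func classify(_ image: UIImage, with model: Inceptionv3) -> String? {
    guard let input = image.pixelBuffer(width: 299, height: 299),
          let output = try? model.prediction(image: input) else { return nil }
    return output.classLabel
}
```

In `imagePickerController(_:didFinishPickingMediaWithInfo:)`, dismiss the picker, read the photo with `info[.originalImage] as? UIImage` (Swift 4.2+; in Swift 4 the dictionary is `[String: Any]` and the key is `UIImagePickerControllerOriginalImage`), show it in the image view, and set the result label's text from `classify(image, with: model)`. Create the model once and keep it in a property, e.g. `let model = Inceptionv3()`; newer Xcode versions may mark this no-argument initializer as deprecated in favor of `init(configuration:)`. The prediction runs synchronously on the main thread here, which is acceptable for a demo.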

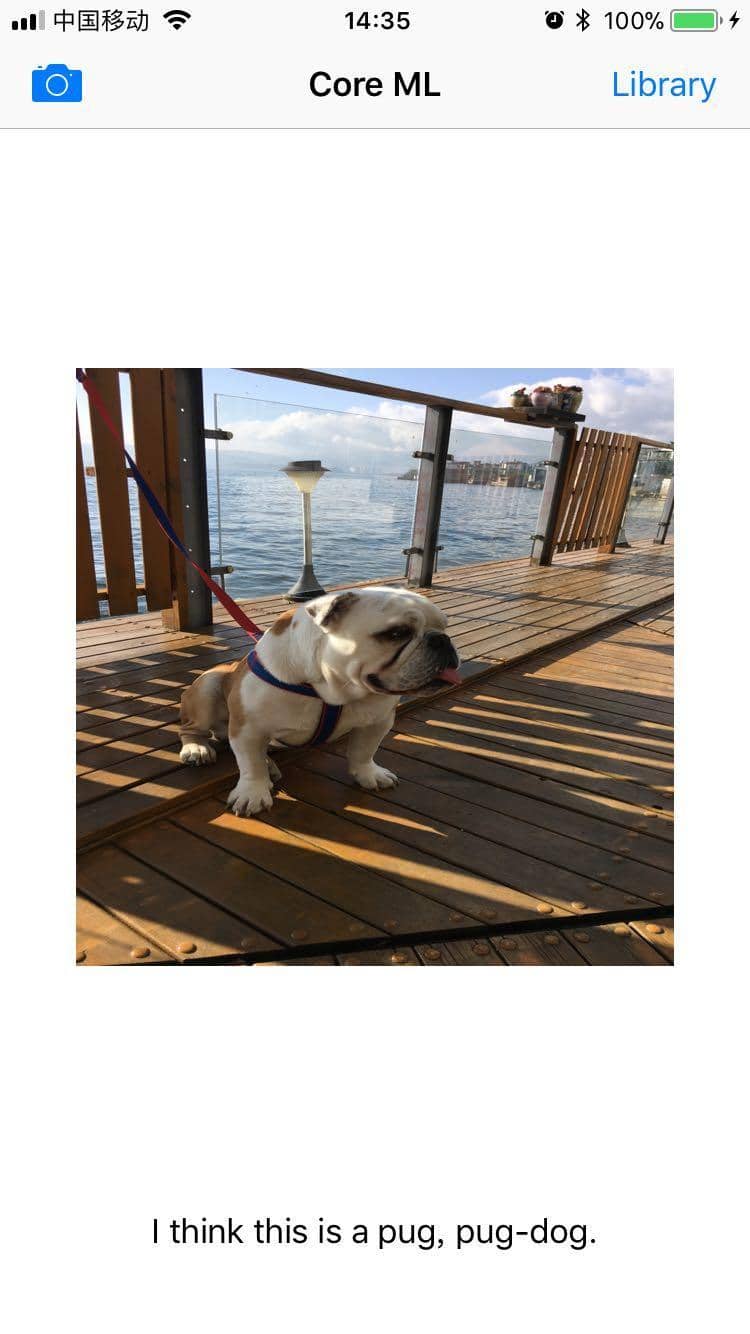

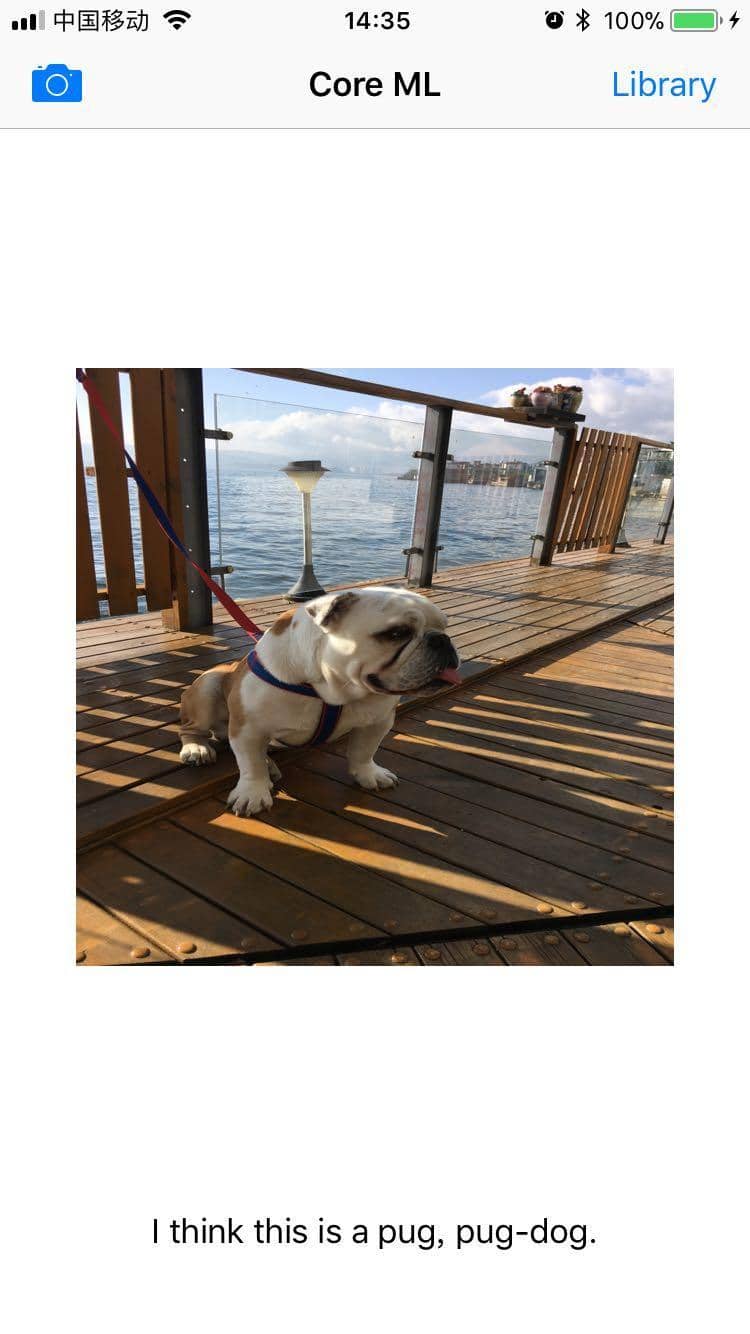

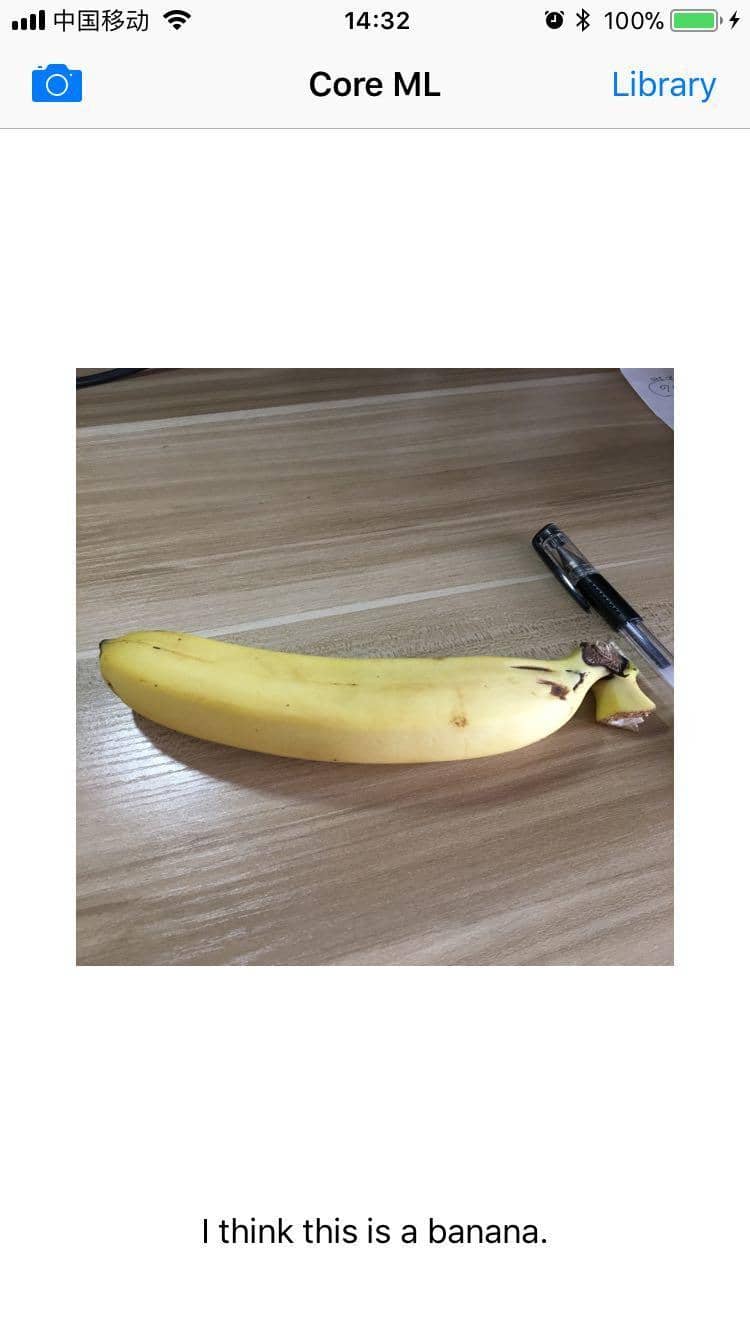

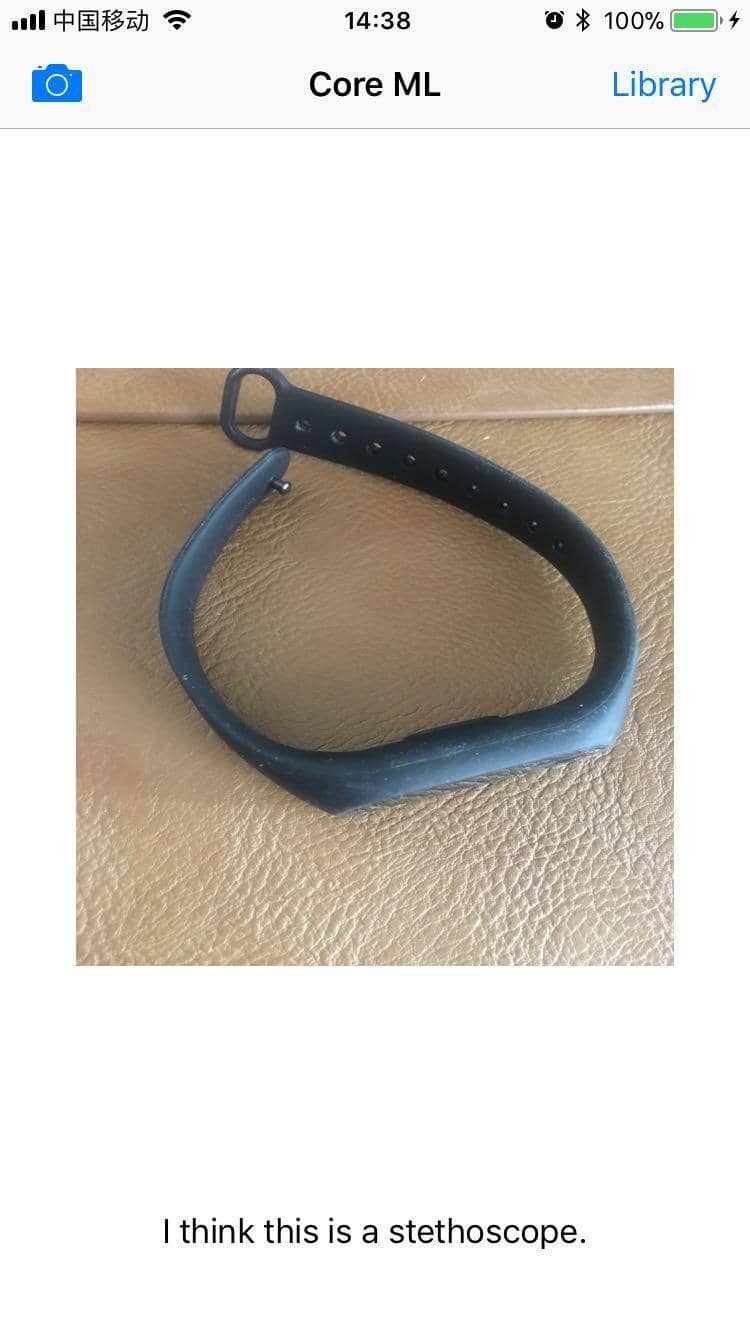

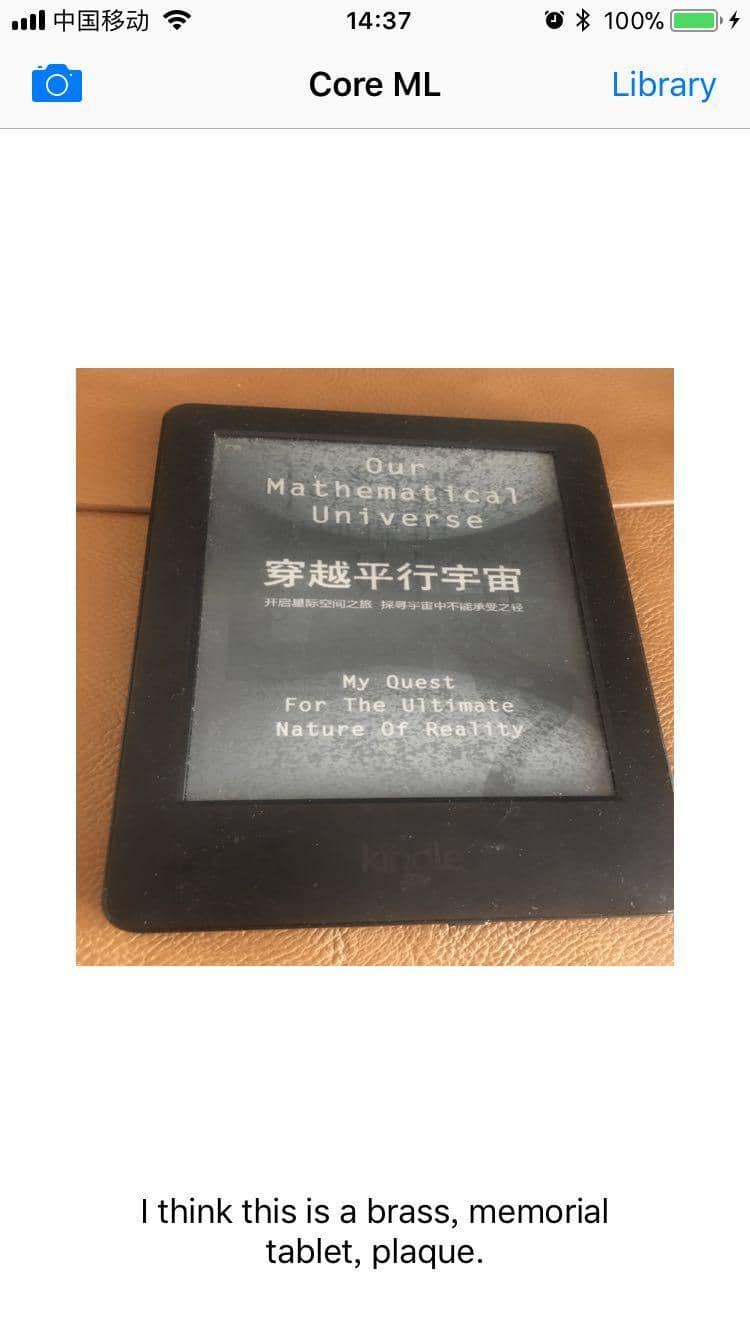

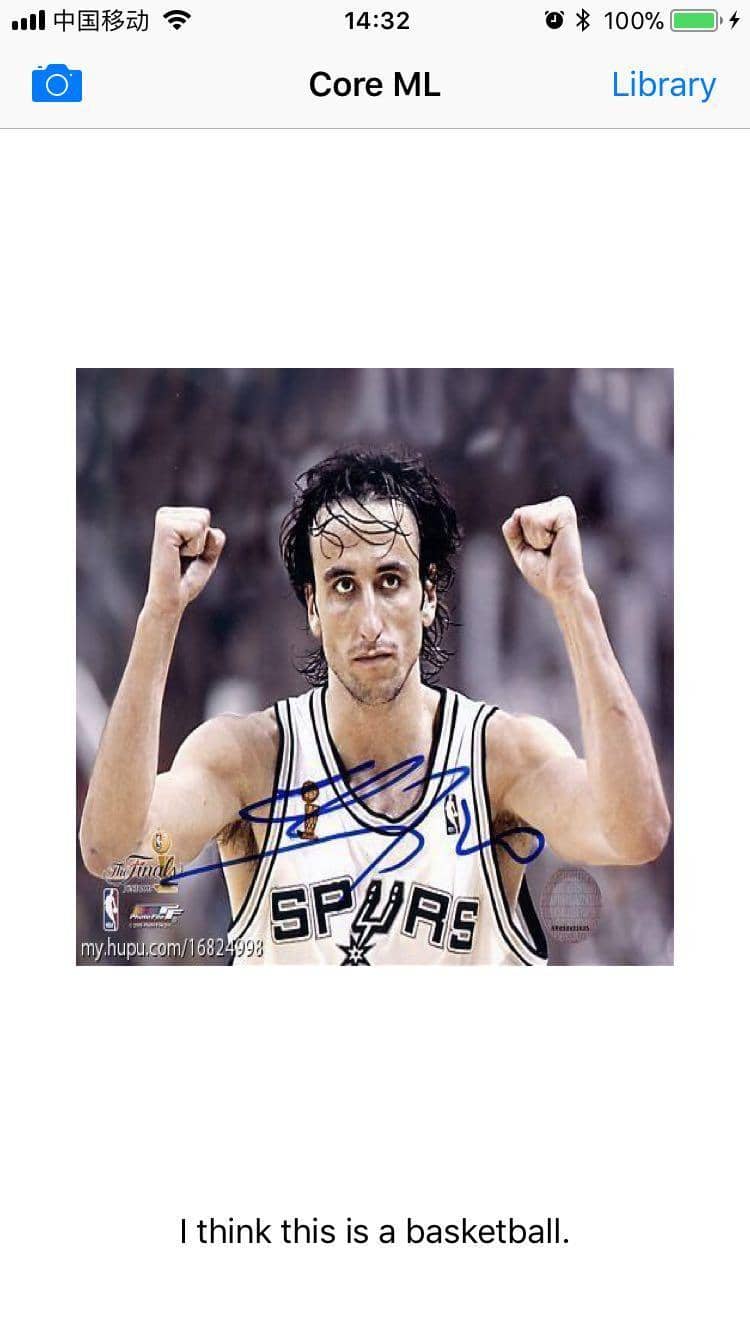

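Now the app can run and recognize what is in a picture. I tried it on a few simple examples, and the results are reasonably good:

It can identify the 🐶 breed: a pug.

A 🍌 should be comparatively easy:

My friend's cat, whose breed I don't know either, was recognized as a Persian cat:

I have no idea how this one was recognized as espresso 🤔

Of course, some results are rather strange and way off.

A Xiaomi Mi Band was recognized as a stethoscope 😢

The Kindle could not be recognized at all 😢😢😢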
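When a result looks odd, as with the espresso and stethoscope cases, it can help to look at more than the single top label. Assuming the generated output also exposes a `classLabelProbs` dictionary mapping each label to its probability (verify this in the class Xcode generates), the hypothetical helpers `topPredictions(_:k:)` and `describe(_:)` below list the few most likely candidates in plain Swift.

```swift
import Foundation

// Hypothetical helper: given a label -> probability dictionary, keep the k most
// likely labels, highest probability first. Ties are broken alphabetically so
// the result does not depend on dictionary iteration order.
func topPredictions(_ probs: [String: Double], k: Int = 5) -> [(label: String, probability: Double)] {
    let ranked = probs.sorted { lhs, rhs in
        lhs.value != rhs.value ? lhs.value > rhs.value : lhs.key < rhs.key
    }
    // prefix traps on a negative count, so clamp k at zero.
    return ranked.prefix(max(0, k)).map { (label: $0.key, probability: $0.value) }
}

// Format the candidates one per line as "label xx.x%", e.g. for a multi-line
// UILabel or for print().
func describe(_ top: [(label: String, probability: Double)]) -> String {
    return top
        .map { entry -> String in
            let percent = String(format: "%.1f%%", entry.probability * 100)
            return "\(entry.label) \(percent)"
        }
        .joined(separator: "\n")
}
```

For example, `describe(topPredictions(output.classLabelProbs, k: 3))` could be shown in the result label instead of `classLabel` alone. Sorting the whole dictionary is cheap for Inception v3's 1,000 ImageNet categories, so no partial-selection algorithm is needed.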

4. Core ML learning resources

Official Core ML documentation

WWDC 2017:

Code: CoreMLDemo (because Inceptionv3.mlmodel is fairly large, I did not upload it to GitHub; download it from Machine Learning and drag it into the project)

Reference: Introduction to Core ML: Building a Simple Image Recognition App

Author: andyron

Last updated: 2024-07-16

License: Original article. If you repost it, please credit the author and the source. Thank you!